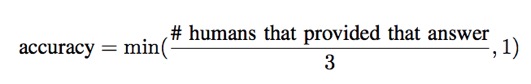

Table of Contents

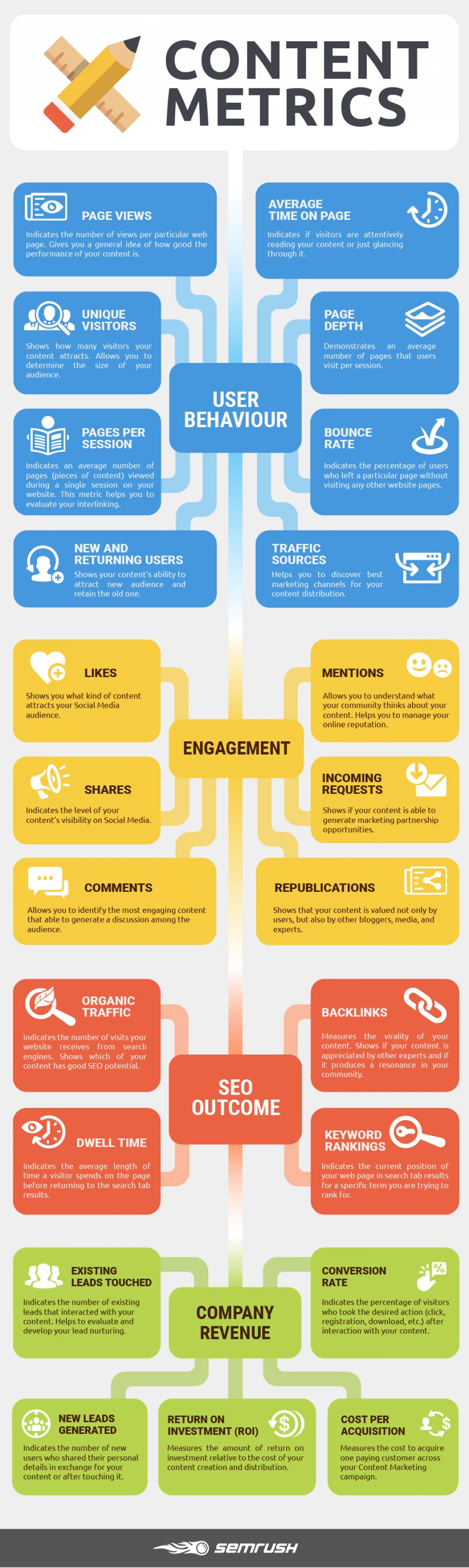

Are you struggling to choose the right evaluation metric for your machine learning model? If so, you’re not alone. Many data scientists and machine learning practitioners find it challenging to select the right evaluation metric for their models. But fear not, we’re here to help!

Pain Points of Choosing an Evaluation Metric

Without the right evaluation metric, it is hard to tell how well your model is performing. A poorly chosen metric can produce misleading results and conclusions, which can delay development and prevent a model from being deployed successfully.

How to Choose an Evaluation Metric?

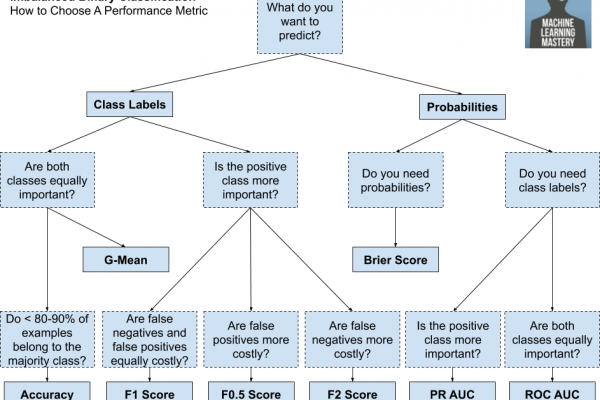

The key to choosing the right evaluation metric is to match the metric to the specific problem you are trying to solve. The first step is to ask the right questions: What is the goal of the model? What are the expected outputs? What are the costs of false positives/negatives? Consider all possible outcomes and how they might affect your business or research question.

Before selecting an evaluation metric, it’s essential to understand what kind of problem the model addresses. Some metrics are better suited to binary classification, while others are more appropriate for multi-class classification or regression. Different evaluation metrics tell you different things about the model’s performance, so choose wisely.

Summary of Choosing an Evaluation Metric

Choosing the right evaluation metric for your machine learning model is crucial for achieving accurate results. When selecting an evaluation metric, consider the specific problem you’re solving and the nature of the model. Different evaluation metrics can help you understand various aspects of your model’s performance, so ensure that you have chosen wisely.

The Importance of Accuracy Metric

Accuracy is the most common metric used for classification problems. It shows the percentage of correct predictions made by the model. Despite its importance, accuracy should not be used as the only evaluation metric for machine learning models. The reason for this is that it fails to account for the cost associated with wrong predictions. For instance, in medical diagnosis, a false negative can be more harmful than a false positive.
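To make the point about costs concrete, here is a minimal sketch in plain Python. The labels, the two hypothetical models, and the cost values (`fn_cost=10`, `fp_cost=1`) are all made up for illustration; the only claim is that two models can share the same accuracy while differing sharply in the cost of their mistakes.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def total_cost(y_true, y_pred, fn_cost, fp_cost):
    """Sum of error costs, with separate prices for each error type."""
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return fn * fn_cost + fp * fp_cost


# Illustrative data: 2 positive cases out of 10.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
model_a = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0]  # misses both positives (2 false negatives)
model_b = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]  # finds both, raises 2 false alarms

acc_a = accuracy(y_true, model_a)
acc_b = accuracy(y_true, model_b)

# Assumed costs: a false negative is ten times worse than a false positive.
cost_a = total_cost(y_true, model_a, fn_cost=10, fp_cost=1)
cost_b = total_cost(y_true, model_b, fn_cost=10, fp_cost=1)

print(f"accuracy: A={acc_a:.2f}, B={acc_b:.2f}")
print(f"cost:     A={cost_a}, B={cost_b}")
```

Both models score 0.80 on accuracy, yet under the assumed costs model A is ten times more expensive than model B.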

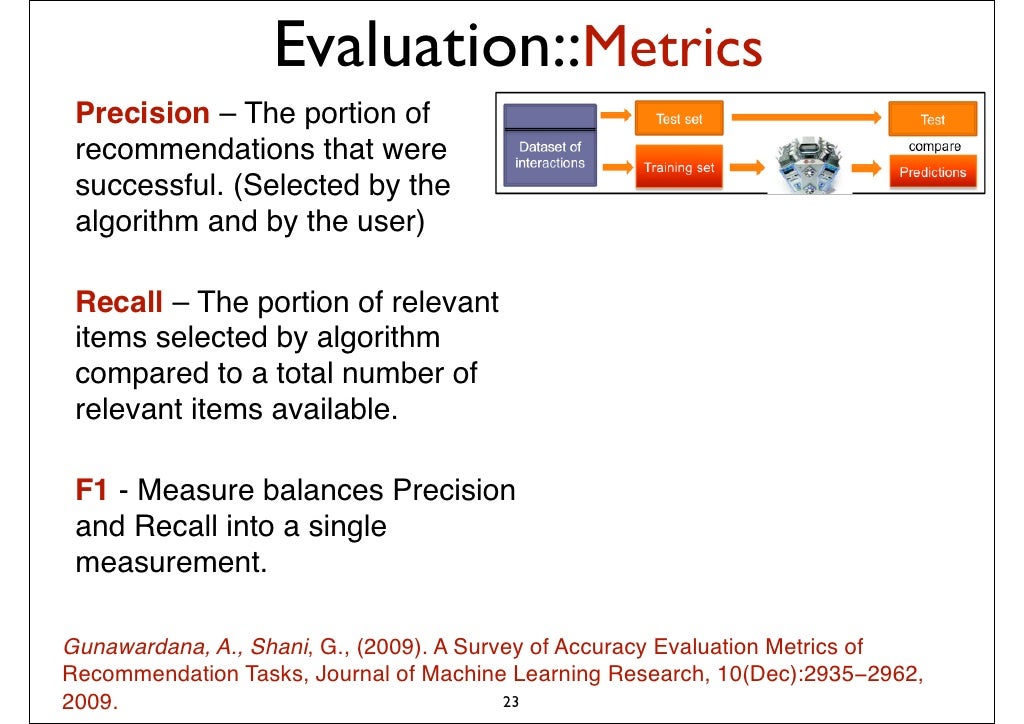

To get a fuller picture of your model, also consider other evaluation metrics such as precision, recall, specificity, and F1 score. Precision is the fraction of the model’s positive predictions that are actually positive, while recall is the fraction of actual positive instances that the model correctly identifies. Specificity is the fraction of actual negative instances that the model correctly identifies, and the F1 score is the harmonic mean of precision and recall.
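The four metrics above all derive from the confusion-matrix counts. The following is a small sketch in plain Python using the standard definitions; the function name `classification_metrics` and the sample labels are illustrative, and the code assumes binary labels encoded as 0 and 1 with at least one example of each outcome so that no denominator is zero.

```python
def classification_metrics(y_true, y_pred):
    """Compute precision, recall, specificity and F1 from binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

    precision = tp / (tp + fp)    # of predicted positives, how many are right
    recall = tp / (tp + fn)       # of actual positives, how many were found
    specificity = tn / (tn + fp)  # of actual negatives, how many were found
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return {
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }


# Illustrative labels: 4 positives, 6 negatives.
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
preds = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

metrics = classification_metrics(labels, preds)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In production code you would also want to decide what to return when a denominator is zero, for example when the model never predicts the positive class.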

Choosing Evaluation Metrics for Imbalanced Datasets

Model evaluation on imbalanced datasets is often challenging, because accuracy may not reflect the true performance of the model: a model that always predicts the majority class can score high accuracy while never detecting the minority class. In such cases, evaluation metrics such as Area Under the ROC Curve (AUC-ROC), Area Under the Precision-Recall Curve (AUC-PRC), or G-mean (the geometric mean of recall and specificity) are commonly used. These metrics give a better picture of the model’s performance on imbalanced datasets.
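As a rough illustration of two of these metrics, the sketch below computes AUC-ROC and G-mean in plain Python. It relies on two standard facts: AUC-ROC equals the probability that a randomly chosen positive is scored above a randomly chosen negative (ties counting half), and G-mean is the geometric mean of recall and specificity. The scores, the 0.5 threshold, and the function names are assumptions for the example; AUC-PRC is left out to keep it short, and the pairwise loop is quadratic, so it is only meant for small inputs.

```python
import math


def roc_auc(y_true, scores):
    """AUC-ROC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # a tie counts as half a correct ranking
    return wins / (len(pos) * len(neg))


def g_mean(y_true, y_pred):
    """Geometric mean of recall and specificity."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    return math.sqrt((tp / (tp + fn)) * (tn / (tn + fp)))


# Illustrative imbalanced data: 2 positives, 8 negatives.
truth = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
model_scores = [0.9, 0.4, 0.6, 0.3, 0.2, 0.2, 0.1, 0.1, 0.1, 0.05]
hard_preds = [1 if s >= 0.5 else 0 for s in model_scores]  # assumed threshold

auc = roc_auc(truth, model_scores)
gm = g_mean(truth, hard_preds)
acc = sum(t == p for t, p in zip(truth, hard_preds)) / len(truth)

print(f"accuracy={acc:.3f}  auc_roc={auc:.4f}  g_mean={gm:.4f}")
```

Note that AUC-ROC is computed from the scores and does not depend on the threshold, whereas G-mean and accuracy are computed from the thresholded predictions.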

Choosing Evaluation Metrics for Regression Models

When working with regression models, the most commonly used performance metrics are the mean absolute error (MAE) and the root mean squared error (RMSE). The MAE measures the average magnitude of the errors in a set of predictions. The RMSE is the square root of the average squared error, so it penalizes large errors more heavily than the MAE does. R-squared, also called the coefficient of determination, measures how much of the variation in the target variable the model explains.
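The regression metrics can be sketched in a few lines of plain Python using their standard definitions. The sample values are made up for illustration, and R-squared is computed as one minus the ratio of the residual sum of squares to the total sum of squares, which assumes the true values are not all identical.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error: average size of the errors."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error: square root of the average squared error."""
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mse)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


# Illustrative values; the last prediction is off by 3.
actual = [3.0, 5.0, 7.0, 9.0]
predicted = [2.0, 5.0, 8.0, 12.0]

mae_value = mae(actual, predicted)
rmse_value = rmse(actual, predicted)
r2_value = r_squared(actual, predicted)

print(f"MAE={mae_value:.3f}  RMSE={rmse_value:.3f}  R^2={r2_value:.3f}")
```

Here the single large error pulls the RMSE well above the MAE, which is the behavior to keep in mind when deciding how much large misses should count.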

Personal Experience with Choosing an Evaluation Metric

During my tenure in the financial sector, I came across various modeling projects where choosing the right evaluation metric was challenging. One project that I remember particularly well involved forecasting customer default rates for a credit card business. Initially, we relied only on accuracy, but it failed to take into account the cost of mistakes. We therefore switched to AUC-ROC, which gave us a better understanding of the model’s performance and helped us select a better model.

Question and Answer

Q. Is Accuracy an adequate metric for evaluating classification models?

No, the accuracy metric does not account for the costs of false positives and false negatives. Therefore, other metrics such as precision, recall, specificity, and F1 score should also be considered.

Q. When should we use AUC-ROC in classification models?

AUC-ROC is a good choice when evaluating classification models on imbalanced datasets, where accuracy alone can be misleading. It gives a better picture of how well the model separates the two classes.

Q. What is the primary performance metric used in regression models?

The primary performance metrics used in regression models are the mean absolute error (MAE) and the root mean squared error (RMSE).

Q. Is it always necessary to use multiple evaluation metrics?

No, it’s not always necessary to use multiple evaluation metrics. The choice of the evaluation metric depends on the specific problem you are trying to solve.

Conclusion of How to Choose an Evaluation Metric

Choosing the right evaluation metric is crucial for achieving accurate results in machine learning models. It’s essential to select metrics that match the specific problem you are trying to solve and the nature of the model. Evaluating models using multiple metrics provides a better understanding of the model’s performance, leading to more accurate predictions and better business outcomes.
